What was the first thing you did this morning? What about the last thing you did before going to bed? Or on your lunch break? Waiting in line? In between meetings?

My guess is that, like most of us, you were on your smartphone. Every time you innocently pick up your phone, you leave a digital footprint: bits of data that are analyzed and acted on by algorithms.

An algorithm is a set of step-by-step instructions a computer follows to perform a task. Many of the algorithms we encounter today use artificial intelligence to comb through data and make correlations and predictions, often more accurately than a human could. It is how Netflix offers you suggestions on what to watch, and how you get ads on Facebook for that product you were just thinking about buying.

These algorithms have a dark side. Called “algorithms of oppression” by Dr. Safiya Umoja Noble or “The New Jim Code” by Ruha Benjamin, these algorithms reinforce oppressive social relations and even install new modes of racism and discrimination.

Because algorithms are trained on past data and decisions, they will repeat behaviors. For example, BIPOC individuals have historically faced discrimination in getting access to lines of credit and capital, so when banks use algorithms to determine the creditworthiness of individuals, they are more likely to repeat discrimination. If there is a history of gender bias at a company, the algorithm that sorts through resumes will be more likely to reject women applicants. U.S. courts and law enforcement sometimes use ‘predictive policing,’ which can determine everything from bond amounts to who is “likely to commit another crime,” to how long an individual is going to spend in prison. Because the U.S. criminal justice system has a long history of being unjust (to say the least) to Black Americans, the algorithms are reinforcing that data. A 2016 investigation by ProPublica even found that the software and algorithms being used in courts rated Black people as a much “higher risk” of committing a crime than white people.
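To make the "trained on past decisions" point concrete, here is a minimal sketch in Python. It is not how any real bank's system works; the records, the group labels "A" and "B," and the `train` and `predict` functions are all invented for illustration. It only shows that a rule learned from biased history hands the same bias back.

```python
from collections import defaultdict

# Hypothetical historical loan decisions: (group, credit band, approved).
# These records are invented for illustration. Both groups have the same
# credit band, but group "B" was approved far less often in the past.
HISTORY = [
    ("A", "good", True), ("A", "good", True), ("A", "good", True), ("A", "good", False),
    ("B", "good", True), ("B", "good", False), ("B", "good", False), ("B", "good", False),
]


def train(history):
    """Learn the past approval rate for each (group, credit band) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [approvals, total]
    for group, band, approved in history:
        counts[(group, band)][0] += int(approved)
        counts[(group, band)][1] += 1
    return {key: approvals / total for key, (approvals, total) in counts.items()}


def predict(model, group, band, threshold=0.5):
    """Approve when applicants 'like this one' were usually approved before."""
    return model.get((group, band), 0.0) >= threshold


model = train(HISTORY)
decision_a = predict(model, "A", "good")  # past approval rate 0.75
decision_b = predict(model, "B", "good")  # past approval rate 0.25
print(decision_a, decision_b)
```

Two applicants with the same credit band get different answers, purely because of how people in their group were treated before. In this sketch the group is an explicit input; a system that never sees the group directly can still pick up the same pattern through related data, such as a zip code.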

To put it simply, we are automating our bias.

The way that most of us will experience this, and have the power to do something about it, is on social media. The platforms have algorithms to flag and stop the spread of hate speech and abusive content. Because of the bias built into these algorithms (consciously and/or unconsciously), instead of “removing and reducing” problematic content, they often end up doing more harm to marginalized groups.

The Markup is a nonprofit newsroom that investigates how institutions are using technology to change our society. Earlier this year, they performed an investigation into how Google and YouTube are blocking social justice-related content. These platforms have brand safety guidelines that prevent ads from running on harmful content, meaning that a neo-Nazi group can’t make money from advertisers on a YouTube video they put out. This is great, right?

Unfortunately, The Markup found that in Google’s guidelines, “Black Lives Matter” and “Black power” were blocked phrases but “white lives matter” and “white power” were not. Discrepancies like this were fixed, but Google’s spokesperson declined to answer questions related to these blocks. Google later quietly blocked 32 more social and racial justice terms, including “Black excellence,” “say their names,” “believe Black women,” “BIPOC,” “Black is beautiful,” “Black liberation,” “civil rights,” “LGBTQ+,” and more.

TikTok had guidelines that excluded creators with “ugly facial looks,” “abnormal body shape” and “too many wrinkles” because it would “decrease the short-term new user retention rate.” The platform said those guidelines were an attempt to prevent bullying but were no longer in use. Twitter’s algorithms have come under investigation as well, and a study in 2019 found that tweets written by Black Americans were twice as likely to be flagged as offensive compared to others.

Instagram is perhaps where we hear the most about social media algorithms privileging whiteness and discriminating against people of color. Last summer, Black plus-size model Nyome Nicholas-Williams had her photos removed on Instagram for nudity, even though she was clothed. She argues that “When a body is not understood, I think the algorithm goes against what it’s taught as the norm, which in the media is white, slim women as the ideal.” A project was created earlier this year called “Don’t Delete My Body,” calling on Instagram to “stop censoring fat bodies.” Kayla Logan, one of the creators of the project, said, “There’s a bot in the algorithm, and it measures the amount of clothing to skin ratio, and if there’s anything above 60%, it’s considered sexually explicit…. It’s inherently fat phobic and discriminatory.”
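Here is one way to read the rule Logan describes, as a rough Python sketch. Instagram has not published its moderation logic, so the `skin_share` and `is_flagged` functions, the way the 60% figure is applied, and the pixel counts are all assumptions made for illustration.

```python
def skin_share(skin_pixels, clothing_pixels):
    """Fraction of the visible body area that is skin rather than clothing."""
    return skin_pixels / (skin_pixels + clothing_pixels)


def is_flagged(skin_pixels, clothing_pixels, threshold=0.60):
    """Flag the photo when the skin share is above the threshold."""
    return skin_share(skin_pixels, clothing_pixels) > threshold


# Invented pixel counts. Both photos assume the same garment covering the
# same number of pixels; photo_b simply shows more body area in the frame.
photo_a = {"skin_pixels": 5_000, "clothing_pixels": 5_000}
photo_b = {"skin_pixels": 9_000, "clothing_pixels": 5_000}

flag_a = is_flagged(**photo_a)  # skin share 0.50
flag_b = is_flagged(**photo_b)  # skin share about 0.64
print(flag_a, flag_b)
```

Under these assumptions, a single fixed cutoff treats the same outfit differently depending on the body wearing it, which is the pattern the "Don't Delete My Body" project is objecting to.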

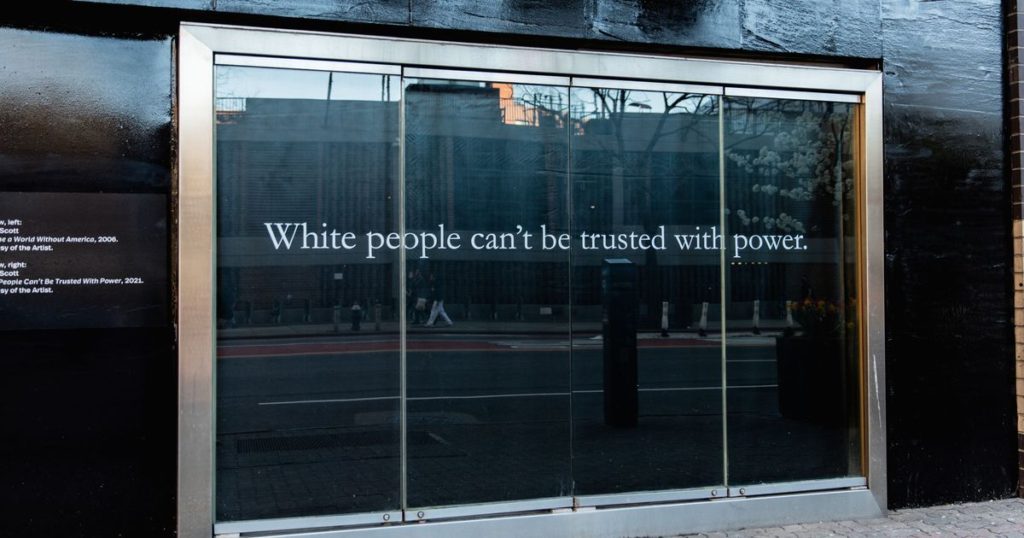

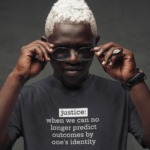

Dread Scott, an artist whose works focus on the experience of Black Americans, has had his artwork removed from Instagram multiple times. His most recent art installation read, “White people can’t be trusted with power,” along with “Imagine a world without America.” When Scott posted images of the works on Instagram, they were promptly removed as “hate speech.” In response, Scott made a new post, reading “White people’s algorithms can’t be trusted with power,” which, predictably, was also quickly removed.

In my role with The Winters Group, I am responsible for our marketing, which includes managing our social media accounts. Our Instagram account frequently gets flagged and ‘shadow banned.’ This is a form of censorship where the account and the images are not deleted from the platform, but they are hidden from users and not shown on “explore” pages or in hashtags. You are not notified when you are shadow banned; in fact, Instagram won’t acknowledge that this even happens, but it’s pretty easy to figure out. Some weeks we get hundreds of thousands of views, but when we’re shadow banned, that number plummets into the mere thousands. Our content is flagged for use of terms like “anti-racist,” “dismantle,” “white supremacy,” “white fragility,” “decolonize,” “reparations” …which is just about every conversation we’re having.

The bias in Instagram’s algorithm is well documented, and although it may not affect the casual user directly, many people rely on Instagram to make a living: artists, bloggers, influencers, musicians, small business owners, independent business consultants, even DEIJ practitioners. Given that one of the primary reasons people use Instagram is to grow their business, its biased algorithm is sending clear messages about what kinds of bodies and identities are fit for consumption.

So, what can we do about it? How can we stop automating bias online?

- The easiest and most effective way that you can combat algorithms of oppression is to support marginalized creators on social media. The more you engage with a social media account, the more the algorithms will show you their content. But based on how the algorithms work, you might not organically come across accounts from creators with marginalized identities. Seek them out and support them. Follow hashtags to find new accounts, like #BlackWomanOwned or #TransArtists. Turn on post notifications for your favorite creators to be notified when they make a new post. Engage with their content, share it with your friends, leave words of support and encouragement. Unfollow all those meme pages to make space on your feeds for those who need it. For many people, social media is how they make their living – selling art, promoting music, finding clients – and they need you to help amplify their business. As Brittany J. Harris previously wrote in this series, “Amplification is one way we shift power.”

- If you’re a creator experiencing censorship and shadow banning on social media, change how you type the words and phrases. Instead of typing “white supremacy,” try “whyte supremacy” or “wh*te suprem*cy.” The algorithms don’t pick up on that as easily. It is frustrating to have to censor your own content on social and racial justice topics, but these platforms are too big of a resource and form of education to not use. Rather than boycott social media platforms, we can reclaim them.

- Hire women and people of color into tech roles. Who writes this code matters. At Facebook, just 4% of roles are held by Black employees and 6% by Hispanic employees. At eight large tech companies, women made up only one-fifth of the technical workforce at each, according to a Bloomberg evaluation. Our digital world has, for the most part, been created by and for cis, straight, white men. When they are the only ones writing the algorithms, they embed their biases into the programs.

- If you’re at an organization involved in the creation and/or commercialization of algorithms and other artificial intelligence, you can request an algorithmic audit with the Algorithmic Justice League.

- Start exploring how your data is being handled. An equitable digital world requires that people have agency and control over how they interact with these systems. To do that, people must be aware of how these systems are being used and who owns the data.
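For the curious, here is a rough Python sketch of why the respelling tip in the list above can work. It assumes a very simple filter that looks for exact phrases in a caption. The phrase list and the `is_flagged` function are hypothetical, and real platforms do not publish their filters and likely use more complex systems.

```python
import re

# Hypothetical list of flagged phrases, for illustration only.
FLAGGED_PHRASES = ["white supremacy", "decolonize"]


def is_flagged(caption, phrases=FLAGGED_PHRASES):
    """Return True when the caption contains any flagged phrase as plain text."""
    normalized = re.sub(r"\s+", " ", caption.lower())
    return any(phrase in normalized for phrase in phrases)


captions = [
    "How to dismantle white supremacy at work",
    "How to dismantle whyte supremacy at work",
    "How to dismantle wh*te suprem*cy at work",
]
results = [is_flagged(caption) for caption in captions]
print(results)
```

A filter like this only matches letters. It cannot tell a post that condemns white supremacy from one that promotes it, so the educational post gets flagged while a small respelling slips past.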

Here are a few resources to get started:

- The Algorithmic Justice League, created by Joy Buolamwini

- Algorithms of Oppression: How Search Engines Reinforce Racism by Safiya Umoja Noble

- Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy by Cathy O’Neil

- Design Justice: Community-Led Practices to Build the Worlds We Need by Sasha Costanza-Chock

- Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor by Virginia Eubanks

- Artificial Unintelligence: How Computers Misunderstand the World by Meredith Broussard

- Race After Technology: Abolitionist Tools for the New Jim Code by Ruha Benjamin

As a marketer, I truly believe in the power of social media. We can create corners of the internet that are safe and affirming. We can spread education to hundreds of thousands in a matter of minutes. We can build community and share stories with people we would never have met otherwise.

With every click, every like, every share, every Tweet, you have the power to operationalize justice, shift power and create the digital world you want.

Ultimately, the algorithms are learning by looking at the world as it is, not as it should be. That part is up to us.

I’ll leave you with this quote from Ruha Benjamin: “Remember to imagine and craft the worlds you cannot live without, just as you dismantle the ones you cannot live within.”